A recent twitterstorm over claims about the ascendance of theories of “panpsychism,” and the damage that poor philosophizing can do to the study of consciousness, has gotten me thinking about consciousness as an object of scientific study again. Some five and a half years ago, I wrote about the sorry treatment consciousness gets from mainstream neuroscientists. At the risk of becoming the old curmudgeon I railed against then, I’d like to revisit the topic and (hopefully) add some clarity to the critiques.

I don’t know enough about the state of academic philosophy of mind to weigh in on the panpsychism debate, which seemed to set this all off (though of course I do intuitively believe panpsychism to be nonsense…). But the territorial arguments over who gets to talk about consciousness (essentially philosophers vs. scientists) are relevant to the sub-debate I care about: why do neuroscientists choose to use the C-word? What kind of work counts as “consciousness” work that couldn’t just as well be called attention, perception, or memory work? As someone who does attention research, I’ve butted up against these boundaries before and have always chosen to stay clearly on my side of things. But I’ve definitely used the results of quote-unquote consciousness studies to inform my attention work, without seeing much of a clear difference between the two. (In fact, in the Unsupervised Thinking episode “What Can Neuroscience Say About Consciousness?”, we cover an example of this imperceptible boundary, along with many of the other issues I’ll touch on here.)

Luckily Twitter allows me to voice such questions and have them answered by prominent consciousness researchers. Here’s some of the back and forth:

I think consciousness is the subject of more worry & scrutiny because it claims to be something more. If it’s really frameable as attention or memory, why claim to study consciousness? Buzz words bring backlash

— Grace Lindsay (@neurograce) February 1, 2018

hi @neurograce, but i don’t think @trikbek means consciousness is framed / frameable as attention or memory. he meant it is frameable in the same ways, i.e. as cognitive mechanisms. the mechanisms are different, so we need to work on it too. when done right it isn’t just buzz

— hakwan lau (@hakwanlau) February 1, 2018

Sure, I understand that meaning. But many times I see consciousness work that really is indistinguishable from e.g. attention or psychophysics. Seems like word choice & journal is what makes it consciousness (& perhaps motivation for that comes from its buzziness)

— Grace Lindsay (@neurograce) February 1, 2018

that’s right. since the field is still at its infancy, a lot of work that isn’t strictly about the phenomenon tags along. but i think at this point we do have enough work directly about the difference between conscious vs unconscious processing, rather than attention / perception

— hakwan lau (@hakwanlau) February 1, 2018

I took @neurograce‘s critique to mean that there seem to be conceptual gaps in making the distinction between conscious processing vs. vanilla attention/perception. Is the distinction crystal clear, @hakwanlau?

— Venkat Ramaswamy (@VenkRamaswamy) February 3, 2018

@VenkRamaswamy . yes, to my mind it is clear.

https://t.co/YLq5pNurng

— hakwan lau (@hakwanlau) February 3, 2018

And with that final tweet, Hakwan links to this paper: What Type of Awareness Does Binocular Rivalry Assess?. I am thankful for Hakwan’s notable willingness to engage with these topics (and do so politely! on Twitter!). Nevertheless I am now going to lay out my objections to his viewpoint…

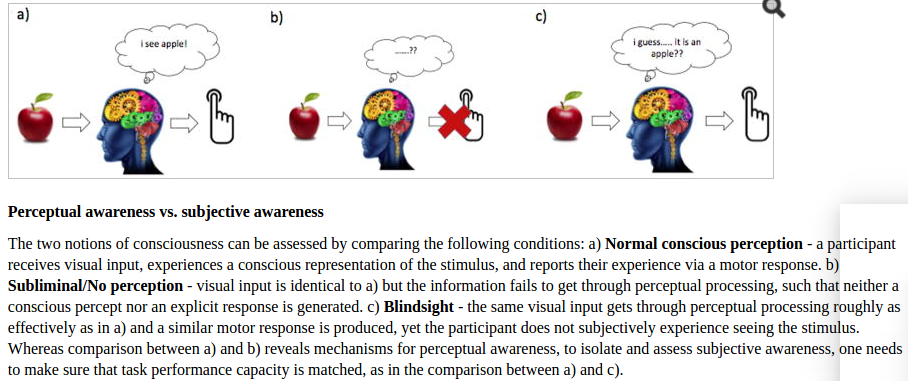

In particular, I’d like to focus on a distinction made in that paper that seems to be at the heart of what makes something consciousness research rather than merely perception research: the claimed difference between “perceptual awareness” and “subjective awareness.” Quoting from the paper:

Perceptual awareness constitutes the visual system’s ability to process, detect, or distinguish among stimuli, in order to perform (i.e., give responses to) a visual task. On the other hand, subjective awareness constitutes the visual system’s ability to generate a subjective conscious experience. While the two may seem conceptually highly related, operationally they are assessed by comparing different task conditions (see Fig. 1): to specifically assess subjective awareness but not perceptual awareness, one needs to make sure that perceptual performance is matched across conditions.

And here is that Figure 1:

I assume (and this is crucial) that the contents of the thought bubble are conveyed to the experimenter via verbal report, and that this is how one can distinguish between (a) and (c).

To study consciousness, then, experiments must be designed so that perceptual awareness and task performance remain constant across conditions while subjective awareness differs. That way, the neural differences observed across conditions can be said to underlie conscious experience.

My issue with this distinction is that I believe it implicitly places verbal self-report on a pedestal, as the one true readout of subjective experience. In my opinion, the verbal self-report through which one supposedly gains access to subjective experience is merely a subset of the ways in which one would measure perceptual awareness. Again, the definition of perceptual awareness is: the visual system’s ability to process, detect, or distinguish among stimuli, in order to perform (i.e., give responses to) a visual task. In many perception/psychophysics experiments, responses are given via button presses or eye movements. But verbal report could just as well be used. I understand that under certain circumstances (blindsight being a dramatic example), performance via verbal report will differ from performance via, e.g., arm movement. But scientifically, I don’t think we can make the leap to saying that verbal report is in any way different from any other behavioral readout. Or at least, it is not in any way more privileged at assessing actual subjective conscious experience in any philosophically interesting sense. One way to highlight this issue: how could subjective awareness be studied in non-verbal animals? And if it can’t be, what would that imply about the conscious experience of those animals? Or what if I trained an artificial neural network to both produce speech and move a robotic arm? Would a discrepancy between those readouts allow for the study of its subjective experience?

Another way to put this, which blurs the distinction between this work and vanilla perceptual science, is that there are many ways to design a perception experiment. And the choices made (stimulus duration, contextual cues, choice of behavioral readout) will definitely affect the results. So by all means, people should document the circumstances under which verbal report differs from other readouts, and look at the neural correlates of that. But why do we choose to call this subset of perceptual work consciousness studies, knowing all the philosophical and cultural baggage that word comes with?

I think it has to do with our (and I mean our, because I share them) intuitions. When I first heard about blindsight and split-brain studies, I had the same immediate fascination with them as most scientists, because of what I believed they revealed about consciousness. I even chose a PhD thesis that let me stay close to this kind of stuff. And I still intuitively believe that these kinds of studies can provide interesting context for my own conscious experience. I know that if I verbally reported not seeing an apple, I would mean that as an indication of my subjective visual experience, and so of course I see why we immediately assume that verbal report could be used as a readout of that experience. I just think that, technically speaking, there is no way to truly measure subjective experience; we only have behavioral readouts. And of course we can imagine situations where, for whatever reason, a person’s verbal self-report doesn’t reflect their subjective experience. Basing a measure solely on our own intuitions and calling it a measure of consciousness isn’t very good science.

It’s possible that this just seems like nitpicking. But Hakwan himself has raised concerns about the reputation of consciousness science as non-rigorous. To do rigorous science, sometimes you have to pick a few nits.

====================================================

Addendum! A useful follow-up via Twitter that helps clarify my position: